What AI really means in supply chain planning

In supply chain planning, the term “AI” gets thrown around for almost everything.

I think this creates confusion and wrong expectations.

A forecasting model based on machine learning is called “AI.” A chatbot answering questions about stock levels is also called “AI.” Even a rule-based workflow triggering purchase orders seems to be branded as “agentic AI.”

Instead of lumping everything together, we can describe what kind of intelligence we’re talking about, and where it actually adds value:

- Machine learning for time-series forecasting and pattern detection.

- Optimization and heuristics for supply and inventory decisions.

- LLMs (Generative AI) for explaining, training, and translating human language into system logic.

- Automation or agentic AI for chaining workflows (hopefully not for the reasoning part).

By separating these layers, it becomes clearer where the real progress lies and where the hype starts.

If you look at a typical supply chain planning process, from collecting data to reporting results and making decisions, each step uses a very different kind of “intelligence”.

1. Data collection and preparation: (still) the unglamorous but crucial foundation

Everything starts with data: historical sales and master data.

This data sits in ERP systems, Excel files, and sometimes external sources. Integrating it isn’t AI.

Where AI does start to help is in data validation and transformation:

- Identifying column types and mapping data structures (but this was possible without LLMs as well).

- Generating SQL or Python code to automate cleaning steps.

Here, LLMs can accelerate setup by writing code, but a technical person is still needed. LLMs on their own don’t replace technical pipelines or guarantee correctness: someone still needs to ensure the runs are reliable, every time.
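To make this concrete, here is a minimal sketch of the kind of cleaning step an LLM might draft. The column names, sample rows, and rejection rules are made up for illustration; a real pipeline would read from the actual source systems and follow rules agreed with the business.

```python
import csv
import io
from datetime import datetime

# Hypothetical raw export; columns and values are invented for this example.
RAW = """item,date,qty
A100,2024-01-01,12
A100,2024-01-02,
A100,01/03/2024,15
B200,2024-01-01,-4
"""

def clean_sales(text):
    """Split a raw sales export into valid rows and rejected rows with a reason."""
    valid, rejected = [], []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            # Accept only ISO dates; anything else is rejected rather than guessed.
            date = datetime.strptime(row["date"], "%Y-%m-%d").date()
            qty = int(row["qty"])  # an empty or non-numeric quantity raises ValueError
            if qty < 0:
                raise ValueError("negative quantity")
        except ValueError as err:
            rejected.append((row, str(err)))
            continue
        valid.append({"item": row["item"], "date": date, "qty": qty})
    return valid, rejected

valid, rejected = clean_sales(RAW)
print(len(valid), "valid rows,", len(rejected), "rejected")
```

Writing the code is the quick part. Deciding what counts as invalid (is 01/03/2024 January or March?) and checking the rejected rows on every run is the part that still needs a person.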

2. Modeling, optimization, and machine learning

This is where machine learning shines, even if it’s no longer trendy to call it “AI.”

Techniques like XGBoost, LightGBM, or Prophet ensembles can find complex, non-linear patterns in demand data that traditional statistical models miss. They learn from new data, and that is intelligence.

But it’s not generative, not chat-based, and not “agentic.”

It’s math. And that is definitely not a bad thing.

The same goes for optimization and heuristics in supply planning.

Linear and mixed-integer programming have been powering production and distribution decisions for decades. They’re deterministic: if the inputs are right, the solver returns the optimal plan, or one within a known tolerance of it.

No LLM will beat a solver at computing the optimal plan, because LLMs weren’t built for that.
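A toy example shows what “exact” means here. The suppliers, lot sizes, costs, and demand below are hypothetical, and the brute-force enumeration only stands in for a real mixed-integer solver, which would use far smarter search on problems of realistic size.

```python
from itertools import product

# Hypothetical data: two suppliers that ship only in whole lots.
SUPPLIERS = {
    "S1": {"lot_size": 50, "cost_per_lot": 400, "max_lots": 6},
    "S2": {"lot_size": 80, "cost_per_lot": 620, "max_lots": 4},
}
DEMAND = 330  # units that must be covered

def cheapest_plan(suppliers, demand):
    """Try every combination of lots and return (cost, lots per supplier) for the cheapest feasible one."""
    names = list(suppliers)
    ranges = [range(suppliers[n]["max_lots"] + 1) for n in names]
    best = None
    for lots in product(*ranges):
        units = sum(k * suppliers[n]["lot_size"] for k, n in zip(lots, names))
        if units < demand:
            continue  # infeasible: demand not covered
        cost = sum(k * suppliers[n]["cost_per_lot"] for k, n in zip(lots, names))
        if best is None or cost < best[0]:
            best = (cost, dict(zip(names, lots)))
    return best

print(cheapest_plan(SUPPLIERS, DEMAND))  # (2620, {'S1': 5, 'S2': 1})
```

Run it a thousand times and the answer is the same every time, and it can be proven that no cheaper feasible plan exists. That guarantee is what a text-generating model cannot give.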

3. Learning and feedback

The next step is learning from outcomes.

If an external event (like a heatwave or promotion) shifts demand, machine learning models can be retrained with those signals. This feedback loop is what differentiates static statistical models from true AI-driven systems.

It’s still “classical AI”, not GenAI.

But it’s where the intelligence lives: understanding patterns, quantifying cause and effect, and improving automatically over time.
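The feedback loop can be sketched in a few lines. This is a deliberately simple stand-in for a real machine learning model: the “model” is just a baseline and a promotion uplift estimated from averages, and the sales figures are invented. The point is the loop itself: new outcomes arrive, the model is refitted, and the next forecast changes.

```python
from statistics import mean

def fit(history):
    """history: list of (units_sold, promo_flag) pairs. Returns (baseline, promo_uplift)."""
    base = [units for units, promo in history if not promo]
    promo_sales = [units for units, promo in history if promo]
    baseline = mean(base)
    # Without any promotion data yet, assume no uplift.
    uplift = mean(promo_sales) / baseline if promo_sales else 1.0
    return baseline, uplift

def forecast(model, promo):
    baseline, uplift = model
    return baseline * (uplift if promo else 1.0)

# Hypothetical history with no promotions observed so far.
history = [(100, False), (104, False), (96, False)]
before = forecast(fit(history), promo=True)

# Two promotion weeks come in; refit on the extended history.
history += [(150, True), (156, True)]
after = forecast(fit(history), promo=True)

print(before, after)
```

Before the promotion weeks are observed, the promotion forecast equals the baseline. After refitting, it moves to roughly the level actually seen during promotions, with no one editing the model by hand.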

4. Planner/user interaction: where LLMs actually have value

This is where LLMs add real value:

- Explaining why forecasts changed.

- Answering “why is this item out of stock?” in natural language.

- Creating training material or guiding new users through a process.

- Summarizing large scenario comparisons or building reports on demand.

In short, they make systems more understandable and accessible, and a lack of both might be the biggest barrier to adoption in many companies.

LLMs are not the core engine. They’re the interface between humans and the engine.

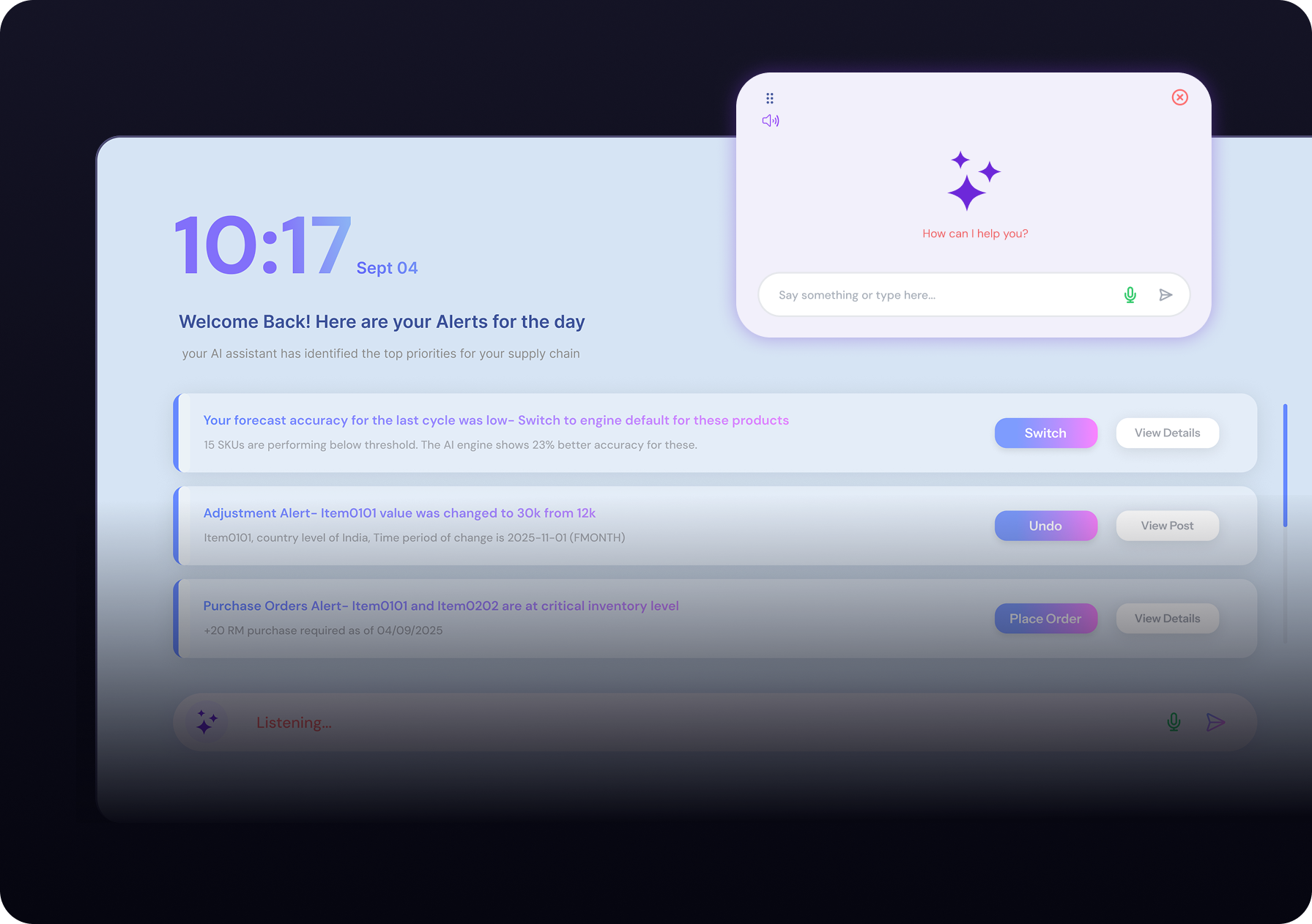

5. Automation and “agentic AI”: mostly old ideas with new packaging (for now)

Most of what’s now called agentic AI used to be called workflow automation.

Take a typical rule: if a forecast changes, run the optimizer, update the plan, and create a purchase order. These are chained actions triggered by data, not autonomous reasoning.
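Written out as code, such a chain is just a trigger followed by fixed steps. The function names, the 10% threshold, and the approval status below are assumptions for illustration, and `run_optimizer` is a placeholder for a call to a real solver.

```python
def run_optimizer(forecast, on_hand):
    """Placeholder for a real solver call: order the shortfall, never a negative amount."""
    return max(0, forecast - on_hand)

def on_forecast_change(old_forecast, new_forecast, on_hand, threshold=0.10):
    """Fixed trigger, fixed steps. Returns a purchase order dict, or None if nothing happens.

    Assumes old_forecast is positive.
    """
    change = abs(new_forecast - old_forecast) / old_forecast
    if change < threshold:
        return None  # change too small to trigger the chain
    order_qty = run_optimizer(new_forecast, on_hand)
    if order_qty == 0:
        return None  # stock already covers the new forecast
    # The order is created for review, not released automatically.
    return {"qty": order_qty, "status": "pending_approval"}

print(on_forecast_change(100, 105, on_hand=80))  # None
print(on_forecast_change(100, 130, on_hand=80))  # {'qty': 50, 'status': 'pending_approval'}
```

Nothing in this chain reasons about anything. Every branch was decided in advance by whoever wrote the rule, which is exactly what workflow automation has always been.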

Today’s agents are good at surfacing alerts or nudging planners, not running the business unsupervised. And that’s probably for the best: one wrong calculation out of a hundred could cost millions.

Why the core won’t change soon

Planning models rely on math. Forecasting errors or infeasible supply plans have direct financial consequences. That’s why companies accept AI assistance but not AI autonomy.

LLMs still make simple reasoning mistakes. They’re impressive for text, unreliable for numbers. So expecting them to “run the supply chain” is not just premature, it’s unsafe.

The near future is more likely a hybrid model:

- Machine learning for predictive accuracy.

- Optimization for decision precision.

- LLMs for usability, transparency, and training.

- Automation for routine execution.

Each layer contributes something, but not everything, and not everything is AI.

The real progress beyond the hype: accessibility and understanding

If we zoom out, the biggest change AI brings isn’t that the math got smarter (although it did, with machine learning, and everyone already seems to take that for granted).

It’s that planners can now talk to their systems, learn faster, and build trust in the numbers. Managers can get a report directly instead of waiting for it.

That accessibility is what will democratize advanced planning: not by replacing planners or optimizers with agents, but by giving people faster ways to use and understand them.